David Feil-Seifer

Professor, Computer Science & Engineering

University of Nevada, Reno

email: dave (at) cse (dot) unr (dot) edu

phone: (775) 784-6469

Research Direction

My research focuses on advancing Socially Assistive Robotics (SAR) to address critical challenges in healthcare and education. By developing robots that provide assistance through social interaction, we aim to augment human caregivers and educators, particularly in areas such as post-stroke rehabilitation, elder care, and support for children with autism spectrum disorders (ASD).

At the Socially Assistive Robotics Group (SARG), we collaborate on projects across several areas of socially assistive robotics. In partnership with the NSF-supported AI Institute for Exceptional Education (AI4EE), we are developing AI solutions to help address the shortage of speech-language pathologists in the U.S. education system. As part of this project, we are designing robots that interact with young children to support their speech and language development.

Additionally, we are exploring socially aware navigation, in which robots optimize their movements to be both socially appropriate and goal-oriented. This research is crucial for integrating robots into public spaces, such as museums and schools, where they must move among and interact with people smoothly.
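The trade-off described above, between social appropriateness and goal-oriented movement, can be illustrated with a weighted cost. The Python sketch below is a minimal, greedy one-step version built on assumptions that are not taken from our work: people are static points, discomfort is an isotropic Gaussian around each person, and the names `social_cost`, `total_cost`, `choose_step`, `sigma`, and `w_social` are hypothetical.

```python
import math

def social_cost(point, people, sigma=0.8):
    """Discomfort at `point`: sum of isotropic Gaussian bumps centred on each person.
    sigma (metres) is an assumed personal-space scale, not a measured value."""
    x, y = point
    return sum(
        math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2 * sigma ** 2))
        for px, py in people
    )

def total_cost(point, goal, people, w_social=2.0):
    """Goal term (straight-line distance left) plus the weighted social term."""
    return math.dist(point, goal) + w_social * social_cost(point, people)

def choose_step(robot, goal, people, step=0.5, n_headings=16, w_social=2.0):
    """Greedy one-step planner: try n_headings evenly spaced moves of length
    `step` and return the candidate position with the lowest total cost."""
    candidates = [
        (robot[0] + step * math.cos(2 * math.pi * k / n_headings),
         robot[1] + step * math.sin(2 * math.pi * k / n_headings))
        for k in range(n_headings)
    ]
    return min(candidates, key=lambda p: total_cost(p, goal, people, w_social))

# A person stands 1 m ahead, on the straight line to the goal.
robot, goal, people = (0.0, 0.0), (5.0, 0.0), [(1.0, 0.0)]
print(choose_step(robot, goal, people, w_social=0.0))  # (0.5, 0.0): straight at the person
print(choose_step(robot, goal, people, w_social=2.0))  # a step off the straight line
```

With `w_social=0.0` the robot heads straight for the goal; a positive weight pulls the chosen step away from the person. Because this sketch is greedy, it can even step sideways or backward; practical socially aware planners typically optimize whole trajectories, so this is only an illustration of the cost trade-off.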

Our work is driven by the belief that combining robotics with human-centered design can improve quality of life and address critical societal needs. By expanding the ways robots can assist people in healthcare, education, and public spaces, we aim to create technologies that are both effective and socially harmonious.

Current Research

Project Dates: 2023-06-01-

Description: In human-robot collaboration, legible robot intent is critical to success because it enables the human to work more effectively with and around the robot. Environments where humans and robots collaborate vary widely, and in the real world they are most often cluttered. However, prior work on legible motion has primarily used uncluttered environments, and success in these environments does not necessarily guarantee success in more cluttered ones...
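In the legible-motion literature, legibility is commonly framed as how quickly an observer can infer the robot's goal from the part of the motion seen so far. One common cost-based formulation scores each candidate goal by how much of a detour the observed path would imply. The Python sketch below is an illustrative version of that idea, not this project's method. It assumes Euclidean path length as the motion cost and straight-line distance as the remaining cost to each goal; that second assumption is exactly what clutter undermines, since obstacles make straight-line distance a poor estimate of the true remaining cost. The function names are hypothetical.

```python
import math

def path_length(points):
    """Euclidean length of a polyline given as a list of (x, y) points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def goal_probabilities(observed_path, goals, priors=None):
    """Observer's belief over goals after seeing observed_path (start ... current).

    Each goal G is scored as
        P(G) * exp(-(cost_so_far + remaining(current, G)) + remaining(start, G)),
    so a path that looks like an efficient route to G makes G more likely.
    cost_so_far is path length; remaining() is straight-line distance
    (an assumption that ignores obstacles).
    """
    start, current = observed_path[0], observed_path[-1]
    cost_so_far = path_length(observed_path)
    if priors is None:
        priors = [1.0 / len(goals)] * len(goals)
    scores = [
        p * math.exp(-(cost_so_far + math.dist(current, g)) + math.dist(start, g))
        for g, p in zip(goals, priors)
    ]
    total = sum(scores)
    return [s / total for s in scores]

goals = [(4.0, 1.0), (4.0, -1.0)]
# Moving straight ahead is ambiguous between the two symmetric goals.
print(goal_probabilities([(0.0, 0.0), (1.0, 0.0)], goals))  # [0.5, 0.5]
# Veering upward early makes the upper goal more probable.
print(goal_probabilities([(0.0, 0.0), (1.0, 0.5)], goals))
```

Under this model, a more legible motion is one that drives the observer's probability for the true goal up early in the trajectory.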

Project Dates: 2019-06-03-

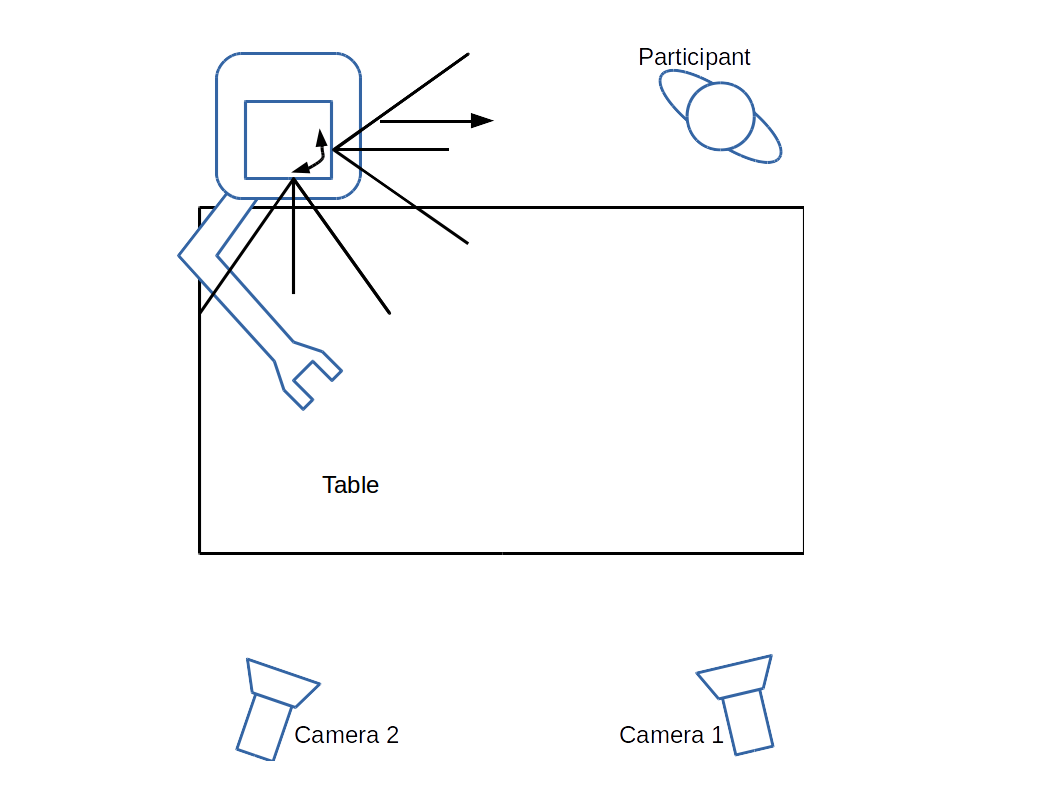

Description: To understand how robots should behave in crowds, we need to model human behavior. To do that, we use laser scanners to track people's movements during different social interactions. We have developed a system that enables multiple stationary 2D laser scanners to capture the movements of people within a large room during social events. Using the calibrated scanners, we tracked a moving person's ankles and predicted their path using linear regression...
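The processing described above can be sketched in two steps: map each sensor's detections into a shared room frame using that sensor's calibrated pose, then fit straight lines to the tracked positions over time and extrapolate. The Python sketch assumes each calibration is a 2D rigid transform `(tx, ty, theta)` and that each scan has already been reduced to one point per person (for example, the midpoint of the two detected ankles). Both assumptions, and all function names, are hypothetical rather than details of our system.

```python
import math

def to_room_frame(points, pose):
    """Map (x, y) points from one sensor's frame into the room frame.
    pose = (tx, ty, theta): the sensor's assumed calibrated position and heading."""
    tx, ty, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(tx + c * x - s * y, ty + s * x + c * y) for x, y in points]

def fit_line(ts, vs):
    """Ordinary least-squares fit v = a + b * t; returns (a, b).
    Needs at least two distinct timestamps."""
    n = len(ts)
    t_mean = sum(ts) / n
    v_mean = sum(vs) / n
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(ts, vs))
    b = sxy / sxx
    return v_mean - b * t_mean, b

def predict_position(track, t_future):
    """track: list of (t, x, y) observations of one person in the room frame.
    Fits x(t) and y(t) independently and extrapolates both to t_future."""
    ts = [t for t, _, _ in track]
    ax, bx = fit_line(ts, [x for _, x, _ in track])
    ay, by = fit_line(ts, [y for _, _, y in track])
    return ax + bx * t_future, ay + by * t_future

# A sensor at (2, 1) rotated 90 degrees sees a point 1 m straight ahead.
print(to_room_frame([(1.0, 0.0)], (2.0, 1.0, math.pi / 2)))  # approximately [(2.0, 2.0)]
# A person walking at constant velocity, observed at t = 0..3 s.
track = [(t, 1.0 + 0.5 * t, 2.0 - 0.25 * t) for t in range(4)]
print(predict_position(track, 5.0))  # (3.5, 0.75)
```

Fitting each coordinate separately against time amounts to a constant-velocity model; it extrapolates well over short horizons but cannot anticipate turns.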

Project Dates: 2017-09-01-

Description: Social intelligence is the ability to interact effectively with others in order to accomplish one's goals (Ford & Tisak, 1983). Social intelligence is critically important for social robots, which are designed to interact and communicate with humans (Dautenhahn, 2007). Social robots might have goals such as building relationships with people, teaching people, learning something from people, helping people accomplish tasks, and completing tasks that directly involve people's bodies (e.g., lifting people, washing people) or minds (e.g., retrieving phone numbers for people, scheduling appointments for people). In addition, social robots may try to avoid interfering with tasks that are being done by people...

Project Dates: 2017-09-01-

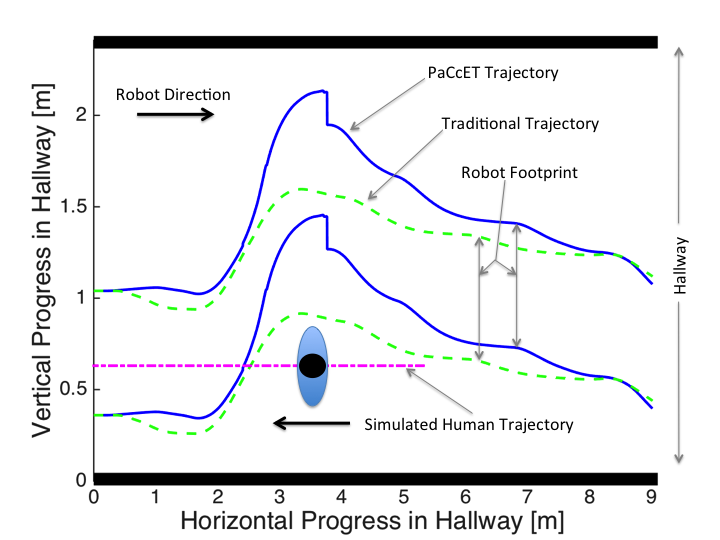

Description: As robots become more integrated into people's daily lives, interpersonal navigation becomes a larger concern. In the near future, Socially Assistive Robots (SAR) will be working closely with people in public environments. To support this, safe robot navigation in real-world environments has become an active area of study. However, for robots to interact effectively with people, they will need to exhibit socially appropriate behavior as well...

Project Dates: 2016-03-01-

Description: Real-world tasks are rarely just a series of sequential steps; they typically combine multiple types of constraints. These tasks pose significant challenges: enumerating all the possible ways in which a task can be performed can lead to large representations, and it is difficult to keep track of the task constraints during execution. Previously, we developed an architecture that provides a compact encoding of such tasks and validated it in a single-robot domain. We recently extended this architecture to address the problem of representing and executing tasks in a collaborative multi-robot setting...
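One compact way to encode a mix of sequential, unordered, and alternative constraints is a task tree whose internal nodes are ordering operators, so that the valid orderings never have to be enumerated. The Python sketch below assumes three hypothetical node types (THEN for ordered steps, AND for steps in any order, OR for alternatives) and computes which primitive steps may start at a given moment. It illustrates the general idea only and is not the architecture referenced above; the class, function, and task names are all made up.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """A task-tree node. kind is one of the hypothetical operators:
    LEAF (a primitive behavior), THEN (children in order),
    AND (all children, any order), OR (any one child)."""
    name: str
    kind: str = "LEAF"
    children: list = field(default_factory=list)

def is_done(node, done):
    """True if the subtree is satisfied, given the set of completed leaf names."""
    if node.kind == "LEAF":
        return node.name in done
    if node.kind == "OR":
        return any(is_done(c, done) for c in node.children)
    return all(is_done(c, done) for c in node.children)  # THEN and AND

def eligible(node, done):
    """Leaf behaviors that may start now without violating any constraint."""
    if is_done(node, done):
        return set()
    if node.kind == "LEAF":
        return {node.name}
    if node.kind == "THEN":
        # Only the first unfinished child of a sequence may make progress.
        first_open = next(c for c in node.children if not is_done(c, done))
        return eligible(first_open, done)
    # AND / OR: every unfinished child may make progress.
    result = set()
    for c in node.children:
        result |= eligible(c, done)
    return result

task = Node("make_tea", "THEN", [
    Node("gather", "AND", [Node("get_cup"), Node("get_teabag")]),
    Node("heat", "OR", [Node("use_kettle"), Node("use_microwave")]),
    Node("pour_and_serve"),
])
print(sorted(eligible(task, set())))                     # ['get_cup', 'get_teabag']
print(sorted(eligible(task, {"get_cup", "get_teabag"})))  # ['use_kettle', 'use_microwave']
```

In a multi-robot setting, the eligible set is where allocation could happen: any idle robot might claim one of those steps. The sketch leaves out that allocation, as well as commitment to an OR branch that has already been partly executed.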

Project Dates: 2014-01-01-

Description: Robotics represents one of the key areas for economic development and job growth in the US. However, the current training paradigm for robotics relies primarily on graduate study. Some advanced undergraduate courses may be available, but students typically have access to at most one or two of them. As a result, students need several years beyond an undergraduate degree to gain mastery of robotics in an academic setting...

Project Dates: 2013-07-01-

Description: SAR envisions robots acting in very human-like roles, such as coach, therapist, or partner. This is in sharp contrast to manufacturing or office-assistant roles, which are better understood. In some cases, we can use human-human interaction as a guide for HRI; in others, human-computer interaction can be a suitable guide. However, there may be cases where neither human-human nor human-computer interaction provides a sufficient framework for anticipating how people will react to a robot over the long term...

Project Dates: 2013-07-01-

Description: The goal of this project is to investigate how human-robot interaction is best structured for everyday settings. Possible scenarios include work, home, and other care settings where socially assistive robots may serve as educational aids, assist persons with disabilities, and act as therapeutic aids for children with developmental disorders. For robots to be integrated smoothly into our daily lives, a much clearer understanding of fundamental human-robot interaction principles is required. Human-human interaction offers a model for how collaboration between humans and robots may occur...

Project Dates: 2013-07-01-

Description: Scenarios that call for long-term HRI (lt-HRI), such as home health care and cooperative work environments, have interesting social dynamics even before robots are introduced. Because the success of human-only teams can be limited by the team's level of trust and willingness to collaborate, factors that may affect the adoption of robots in long-term settings deserve consideration. A coworker who is unwilling to collaborate with, or to trust the abilities of, a fellow teammate may refuse to include that teammate in many job-related tasks due to territorial behavior. Excluding a robotic team member in this way might eventually reduce productivity in lt-HRI...

Prior Research

Project Dates: 2014-08-01-2018-12-31

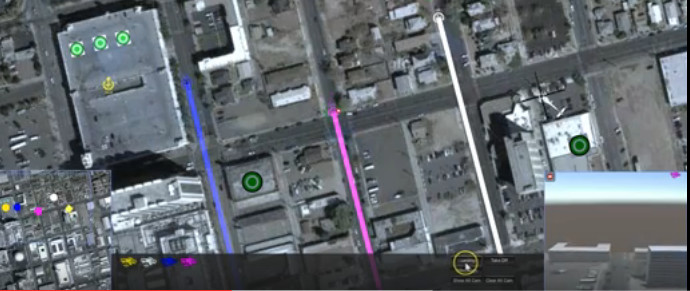

Description: In recent years, robots have been deployed on numerous occasions to support disaster mitigation missions by exploring areas that are either unreachable or potentially dangerous for human rescuers. The UNR Robotics Research Lab has recently teamed up with a number of academic, industry, and public entities to develop an operator interface for controlling unmanned autonomous systems, including UAV platforms, to enhance the situational awareness, response time, and other operational capabilities of first responders during a disaster remediation mission. The First Responder Interface for Disaster Information (FRIDI) will include a computer-based interface for the ground control station (GCS) as well as a companion interface for portable devices such as tablets and cell phones. Our user interface (UI) is designed to address the human-robot interaction challenges specific to law enforcement and emergency response operations, such as maintaining the situational awareness and avoiding the cognitive overload of human operators...

Project Dates: 2016-01-01-2018-01-01

Description: We are developing a disaster mitigation training simulator that lets emergency management personnel develop skills in tasking multi-UAV swarms with overwatch or delivery missions. The simulator depicts the City of Reno after a simulated earthquake and allows an operator to fly a simulated UAV swarm through the disaster zone to complete tasks that help mitigate the effects of the scenario. We are using this simulator to evaluate user interface designs and to help train emergency management personnel in effective UAV operation.

Project Dates: 2016-06-01-2017-11-01

Description: Although robots are increasingly common in real-world environments, many Human-Robot Interaction studies are conducted in laboratory settings. Evidence suggests that laboratory settings can skew participants' feelings of safety. This project probes the consequences of this effect, the Safety Demand Characteristic, and its impact on the field of Human-Robot Interaction.

Project Dates: 2014-07-01-2016-12-31

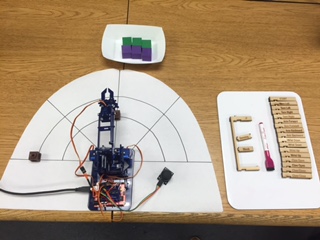

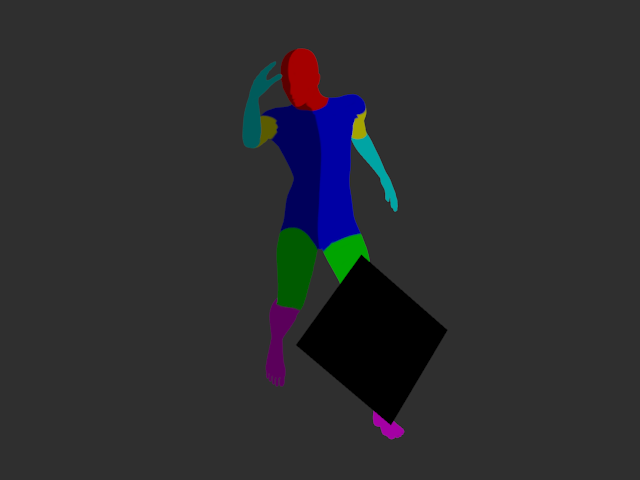

Description: Detecting humans is a common need in human-robot interaction. A robot working in proximity to people often needs to know where the people around it are, and may also need to know each person's pose, i.e., the location of their arms and legs. Attempting to solve this problem with a monocular camera is difficult because of the high variability in the data; for example, differences in clothing and hair can easily confuse the system, as can differences in scene lighting...
